Influence Analysis for Performance Data

I worked on this project as part of a team at LLNL made up of researchers from the Data Analysis and High Performance Computing (HPC) groups. The HPC team uses multiple applications that operate in a high-dimensional parameter space. Each application has parameter settings that can be tuned in different ways to solve the same problem, and each choice results in different performance characteristics (execution times). Running the program under every possible parameter setting to measure performance is expensive, primarily because most of the parameters are discrete with multiple possible levels, so the number of combinations grows multiplicatively. Given this context, their primary questions were three-fold:

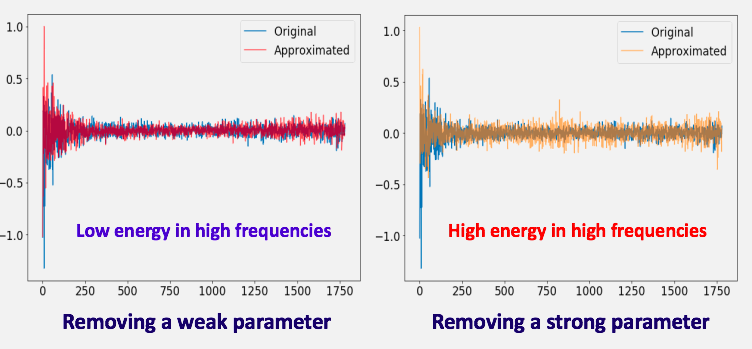

- Global parameter influence analysis: How can the overall influence of each parameter on a program's execution time be quantified? In other words, which parameters are the most and least influential?

- Subdomain analysis: How does influence vary across different subdomains of the parametric space?

- Enhancing predictive power with less data: Can the execution time of a program be predicted accurately for all parameter combinations from only x% of the data (with x ideally much smaller than 100)?

Furthermore, interpretability of the predictive model was a highly desired trait. We therefore implemented a novel, interpretable, model-agnostic influence analysis using Graph Signal Processing, a relatively new area of Machine Learning.
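To give a rough feel for the graph-signal view, here is a minimal sketch. It is not the method from the poster. It assumes a graph whose nodes are parameter configurations, with an edge between any two configurations that differ in exactly one parameter, and treats execution time as a signal on those nodes. The total variation of the signal (the Laplacian quadratic form, i.e. the sum of squared differences across edges) is then split by which parameter each edge changes. The function name `influence_by_parameter`, the level counts, and the synthetic runtimes are all hypothetical and chosen only for illustration.

```python
import itertools
import numpy as np

def influence_by_parameter(levels, runtimes):
    """Share of the signal's total variation attributed to each parameter.

    levels   -- number of discrete levels per parameter, e.g. [3, 2, 4]
    runtimes -- execution times ordered like itertools.product over the levels
    """
    configs = list(itertools.product(*[range(n) for n in levels]))
    index = {c: i for i, c in enumerate(configs)}
    f = np.asarray(runtimes, dtype=float)
    variation = np.zeros(len(levels))
    for c in configs:
        i = index[c]
        for p, n in enumerate(levels):
            # Visit only higher levels so each undirected edge is counted once.
            for v in range(c[p] + 1, n):
                neighbor = c[:p] + (v,) + c[p + 1:]
                variation[p] += (f[i] - f[index[neighbor]]) ** 2
    total = variation.sum()
    return variation / total if total > 0 else variation

# Synthetic example (made-up data): parameter 0 dominates, parameter 2 has no effect.
levels = [3, 2, 4]
runtimes = [10.0 * a + 1.0 * b + 0.0 * c
            for a, b, c in itertools.product(*[range(n) for n in levels])]
scores = influence_by_parameter(levels, runtimes)
print(scores)
```

In this sketch a parameter with a large share is one whose changes move execution time the most. Restricting the loop to a subset of configurations would give a comparable score for a subdomain, though that is again an assumption about how such an analysis could be set up rather than a description of our implementation.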

A poster summarizing our findings and methodology was presented at SC17, the premier supercomputing conference. The poster can be viewed/downloaded below: